Overview

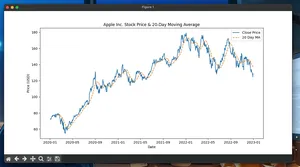

Use Python to compute key algorithmic trading metrics (CAGR, Sharpe, drawdown) and publish both raw PnL and summaries to Google Sheets. This guide shows a minimal working example and practical tips for reliable, fast updates.

What you’ll build:

- A Python script that generates or ingests strategy returns

- A metrics summary sheet and a PnL sheet in Google Sheets

- Efficient bulk writes and optional sharing

Quickstart

- Install packages

- pip install pandas numpy gspread google-auth

- Create a service account and key

- In your Google Cloud project, create a service account and a JSON key file

- Save the file locally (e.g., service_account.json)

- Enable API scopes in code

- Use spreadsheets and drive scopes to create and edit sheets

- Choose how to access a spreadsheet

- Easiest: let the script create a new spreadsheet the service account owns

- Or: create a Google Sheet manually and share it with the service account email

- Run the script

- The script writes PnL and metrics, prints the spreadsheet URL, and optionally shares it if GSHEETS_SHARE_WITH is set

Data model in Sheets

- PnL worksheet: Date, Return, Equity

- Summary worksheet: key metrics as name-value pairs

Minimal Working Example (Python)

import os

import numpy as np

import pandas as pd

import gspread

from math import sqrt

from google.oauth2.service_account import Credentials

# Config

SCOPES = [

"https://www.googleapis.com/auth/spreadsheets",

"https://www.googleapis.com/auth/drive",

]

SERVICE_ACCOUNT_FILE = "service_account.json" # Path to your JSON key

SHARE_WITH = os.getenv("GSHEETS_SHARE_WITH") # Optional: email to share the sheet with

# 1) Authenticate

creds = Credentials.from_service_account_file(SERVICE_ACCOUNT_FILE, scopes=SCOPES)

client = gspread.authorize(creds)

# 2) Create spreadsheet (service account will own it)

sh = client.create(pd.Timestamp.utcnow().strftime("Algo Results %Y%m%dT%H%M%SZ"))

# Optional: share so your user account can see it in Drive

if SHARE_WITH:

sh.share(SHARE_WITH, perm_type="user", role="writer")

# 3) Prepare worksheets

# Remove default sheet for cleanliness and add two named sheets

try:

sh.del_worksheet(sh.sheet1)

except Exception:

pass

ws_pnl = sh.add_worksheet(title="PnL", rows=2000, cols=10)

ws_summary = sh.add_worksheet(title="Summary", rows=50, cols=4)

# 4) Generate example daily returns (replace with your strategy output)

np.random.seed(42)

periods = 252 # ~1 trading year

mu, sigma = 0.0005, 0.01

returns = pd.Series(np.random.normal(mu, sigma, periods),

index=pd.bdate_range("2024-01-01", periods=periods))

def compute_metrics(returns: pd.Series, rf=0.0, periods_per_year=252):

returns = returns.dropna()

n = len(returns)

equity = (1 + returns).cumprod()

total_return = equity.iloc[-1] - 1.0

cagr = (1 + total_return) ** (periods_per_year / n) - 1.0 if n > 0 else np.nan

vol = returns.std() * sqrt(periods_per_year)

sharpe = ((returns.mean() * periods_per_year) - rf) / vol if vol > 0 else np.nan

downside = returns[returns < 0].std() * sqrt(periods_per_year)

sortino = ((returns.mean() * periods_per_year) - rf) / downside if downside > 0 else np.nan

rolling_max = equity.cummax()

drawdown = equity / rolling_max - 1.0

max_dd = drawdown.min() if not drawdown.empty else np.nan

win_rate = (returns > 0).mean()

avg_win = returns[returns > 0].mean()

avg_loss = returns[returns < 0].mean()

return {

"Observations": n,

"Total Return": total_return,

"CAGR": cagr,

"Volatility": vol,

"Sharpe": sharpe,

"Sortino": sortino,

"Max Drawdown": max_dd,

"Win Rate": win_rate,

"Avg Win": avg_win,

"Avg Loss": avg_loss,

"Final Equity": float(equity.iloc[-1]) if n else np.nan,

}, equity

metrics, equity_curve = compute_metrics(returns)

# 5) Write PnL table in one bulk update

pnl_values = [["Date", "Return", "Equity"]]

for dt, r in returns.items():

pnl_values.append([dt.strftime("%Y-%m-%d"), float(r), float((1 + returns.loc[:dt]).prod())])

ws_pnl.update("A1", pnl_values, value_input_option="RAW")

# 6) Write Summary as name-value pairs

summary_values = [["Metric", "Value"]]

for k, v in metrics.items():

summary_values.append([k, float(v) if pd.notna(v) else ""])

ws_summary.update("A1", summary_values, value_input_option="RAW")

print("Spreadsheet URL:", sh.url)

Notes:

- Replace the synthetic returns with your backtest/live PnL

- For live runs, append the latest row to PnL and recompute metrics

Updating With Live Results (incremental)

- Append new rows with worksheet.append_rows([[date, return, equity]])

- Recompute metrics on appended data; write only the Summary block

- Consider a “Latest” sheet for current status to avoid rewriting large ranges

Example append snippet:

# Assuming ws_pnl already references your PnL worksheet

from datetime import datetime

new_dt = datetime.utcnow().strftime("%Y-%m-%d")

new_ret = 0.002

# Compute new equity by reading just the last equity value

last_equity = float(ws_pnl.get("C2:C")[0][-1]) if ws_pnl.row_count > 1 else 1.0

new_equity = last_equity * (1 + new_ret)

ws_pnl.append_rows([[new_dt, new_ret, new_equity]], value_input_option="RAW")

Recommended layout

- PnL: raw daily or per-trade results

- Summary: compact metrics for dashboards and chart data sources

- Optional sheets:

- Params: strategy parameters and versions

- Trades: per-trade details if needed (separate from daily PnL)

Pitfalls and gotchas

- Auth/permissions: if you create a sheet with the service account, share it to your user email to see it in Drive

- Quotas: avoid per-cell updates; batch writes to rectangular ranges

- Timezones: standardize to UTC; store timestamps as ISO-8601 strings

- Numeric formats: Sheets may auto-format; write RAW and format in UI if needed

- Series gaps: NaNs break cumulative equity; forward-fill or drop as appropriate

- Date order: ensure ascending dates before cumulative calculations

- Large sheets: keep PnL lean; archive old rows to a second sheet

- Secrets: do not commit service_account.json; use environment variables and .gitignore

Performance notes

- Prefer ws.update("A1", values) with a 2D list over cell-by-cell updates

- Group multiple ranges with sh.batch_update for fewer round-trips

- Append only new rows for live runs; avoid rewriting the entire PnL

- Precompute metrics in Python; send only compact outputs to Sheets

- Cache: keep the last equity locally to compute incremental updates

- Network resilience: add retries with exponential backoff on 429/5xx

Tiny FAQ

Q: Do I need a Google Cloud project? A: Yes. Create a service account with a JSON key and enable Sheets/Drive.

Q: Can I write a pandas DataFrame directly? A: Convert to a 2D list with df.itertuples or df.values.tolist and use ws.update. Optional helpers exist but aren’t required.

Q: How do I stream live results safely? A: Buffer updates (e.g., every N trades or minutes), append in batches, and recompute summary. Respect API quotas.

Q: How do I control formats? A: Write RAW values and adjust formats in the Sheets UI or via batch_update with formatting requests if needed.

Q: Where should I compute metrics? A: In Python for speed and reproducibility. Use Sheets mainly for visualization and sharing.

Next steps

- Replace synthetic returns with your backtest/live outputs

- Add a Trades sheet and compute per-trade stats

- Build charts in Sheets referencing PnL and Summary