Overview

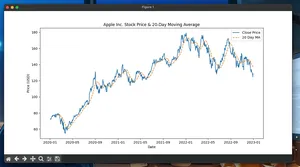

This guide shows how to use Python and PostgreSQL to store large volumes of stock/crypto market data (ticks or OHLCV) efficiently. You’ll get a schema, fast loaders, deduplication, and indexing strategies suited for research, backtesting, and live execution.

Quickstart

- Python 3.10+, PostgreSQL 14+.

- Install:

pip install psycopg[binary] pandas(pandas optional for CSV handling). - Prefer UTC timestamps, composite primary keys, and bulk loading.

Minimal working example (Python + Postgres)

The snippet below creates an OHLCV table, inserts rows with upsert, and queries counts per symbol.

# Minimal example: create table, insert OHLCV with upsert, query

import psycopg

from datetime import datetime, timezone, timedelta

DSN = "postgresql://postgres:postgres@localhost:5432/postgres"

ddl = """

CREATE TABLE IF NOT EXISTS ohlcv (

source text NOT NULL,

symbol text NOT NULL,

ts timestamptz NOT NULL,

open double precision NOT NULL,

high double precision NOT NULL,

low double precision NOT NULL,

close double precision NOT NULL,

volume double precision NOT NULL,

PRIMARY KEY (source, symbol, ts)

);

"""

now = datetime.now(timezone.utc).replace(microsecond=0)

rows = [

("binance", "BTCUSDT", now + timedelta(minutes=i), 30000+i, 30010+i, 29990+i, 30005+i, 1_000+i)

for i in range(5)

]

with psycopg.connect(DSN, autocommit=True) as conn:

with conn.cursor() as cur:

cur.execute(ddl)

cur.executemany(

"""

INSERT INTO ohlcv (source, symbol, ts, open, high, low, close, volume)

VALUES (%s,%s,%s,%s,%s,%s,%s,%s)

ON CONFLICT (source, symbol, ts) DO NOTHING

""",

rows,

)

cur.execute("SELECT symbol, COUNT(*) FROM ohlcv GROUP BY 1 ORDER BY 1")

for symbol, cnt in cur.fetchall():

print(symbol, cnt)

Schema design

A compact OHLCV table works for most strategies; trades/ticks can be added separately.

Columns (ohlcv):

| name | type | notes |

|---|---|---|

| source | text | data provider or exchange |

| symbol | text | e.g., AAPL, BTCUSDT |

| ts | timestamptz | bar start time in UTC |

| open | double precision | price |

| high | double precision | price |

| low | double precision | price |

| close | double precision | price |

| volume | double precision | base units traded |

Primary key: (source, symbol, ts) prevents duplicates and supports partition pruning.

Optional secondary indexes:

- (symbol, ts DESC) INCLUDE (open, high, low, close, volume) for fast recent-bar queries.

Trades (optional):

CREATE TABLE IF NOT EXISTS trades (

source text NOT NULL,

symbol text NOT NULL,

ts timestamptz NOT NULL,

price double precision NOT NULL,

size double precision NOT NULL,

side text,

PRIMARY KEY (source, symbol, ts, price, size)

);

Numbered steps: from zero to millions of rows

- Create the database and user with sufficient privileges.

- Create tables (DDL above). Use UTC consistently.

- Start with inserts + upsert for correctness.

- Switch to COPY (bulk load) for speed; stage, dedupe, and merge.

- Add indexes after initial load (or defer index creation until after big batches).

- Partition by time for retention and vacuuming efficiency.

- Automate via a loader script and a scheduler (cron, Airflow, etc.).

High-throughput loads with COPY + staging

For sustained ingestion, COPY is faster than INSERT. Load into an unlogged staging table, then merge to the target with dedupe.

-- One-time setup

CREATE UNLOGGED TABLE IF NOT EXISTS ohlcv_stage (LIKE ohlcv INCLUDING ALL);

# Fast load: COPY into staging, then merge with ON CONFLICT

import io

import psycopg

DSN = "postgresql://postgres:postgres@localhost:5432/postgres"

rows = [

("binance", "BTCUSDT", "2025-01-01T00:00:00Z", 30000, 30010, 29990, 30005, 1000),

("binance", "BTCUSDT", "2025-01-01T00:01:00Z", 30005, 30020, 30000, 30010, 900),

]

with psycopg.connect(DSN) as conn:

with conn.cursor() as cur:

with cur.copy(

"COPY ohlcv_stage (source, symbol, ts, open, high, low, close, volume) FROM STDIN WITH (FORMAT CSV)"

) as copy:

for r in rows:

copy.write_row(r)

# Merge to target with upsert

cur.execute(

"""

INSERT INTO ohlcv (source, symbol, ts, open, high, low, close, volume)

SELECT source, symbol, ts::timestamptz, open, high, low, close, volume

FROM ohlcv_stage

ON CONFLICT (source, symbol, ts) DO NOTHING;

TRUNCATE ohlcv_stage;

"""

)

conn.commit()

Notes:

- Unlogged staging speeds up writes (not crash-safe). Use only for transient loads.

- COPY avoids per-row overhead; 10x–100x faster than INSERT in bulk.

Time partitioning and retention

Native Postgres partitioning helps with data lifecycle and vacuuming throughput.

-- Partitioned parent

DROP TABLE IF EXISTS ohlcv_partitioned CASCADE;

CREATE TABLE ohlcv_partitioned (

LIKE ohlcv INCLUDING DEFAULTS INCLUDING CONSTRAINTS

) PARTITION BY RANGE (ts);

-- Create monthly partition (repeat per month)

CREATE TABLE IF NOT EXISTS ohlcv_2025_01 PARTITION OF ohlcv_partitioned

FOR VALUES FROM ('2025-01-01') TO ('2025-02-01');

-- Index per partition

CREATE INDEX IF NOT EXISTS ohlcv_2025_01_sym_ts ON ohlcv_2025_01 (symbol, ts);

Operational tips:

- Create partitions ahead of time (e.g., monthly) and drop old ones to enforce retention.

- Keep partitions moderately sized (1–20M rows) for efficient maintenance.

Query examples

- Latest N bars for a symbol:

SELECT *

FROM ohlcv

WHERE source = 'binance' AND symbol = 'BTCUSDT'

ORDER BY ts DESC

LIMIT 1000;

- Aggregate trades to 1-minute OHLCV (no extensions):

WITH t AS (

SELECT source, symbol,

date_trunc('minute', ts) AS bucket,

price, size

FROM trades

WHERE symbol = 'BTCUSDT' AND ts >= now() - interval '1 day'

)

SELECT source, symbol, bucket AS ts,

(ARRAY_AGG(price ORDER BY bucket, ts))[1] AS open,

MAX(price) AS high,

MIN(price) AS low,

(ARRAY_AGG(price ORDER BY bucket, ts DESC))[1] AS close,

SUM(size) AS volume

FROM t

GROUP BY source, symbol, bucket

ORDER BY ts;

Pitfalls to avoid

- Mixed timezones: always store UTC (timestamptz) and convert at the edge.

- Duplicates: enforce PRIMARY KEY and use ON CONFLICT DO NOTHING on merges.

- Floats vs money: use double precision for speed; use numeric for exact PnL if needed.

- Oversized transactions: batch COPY in chunks (e.g., 100k–1M rows) to control memory and lock times.

- Too many indexes: add only those that serve reads; build them after bulk loads when possible.

- Autovacuum starvation: high-ingest tables need tuned autovacuum or scheduled VACUUM/ANALYZE.

Performance notes

- Use COPY + staging for bulk; INSERT for trickle updates.

- Keep rows narrow; avoid JSON blobs for hot paths. Normalize reference data (symbols, exchanges).

- Partition by ts for large histories; drop partitions to purge old data.

- Favor sequential writes: group loads by partition/month.

- Enable synchronous_commit = off for staging loads if acceptable.

- For read-heavy backtests, create covering indexes and consider CLUSTER on (symbol, ts).

Tiny FAQ

Q: Should I store ticks or OHLCV? A: Store the highest granularity you need (ticks for microstructure research). Derive OHLCV from trades when required.

Q: How do I handle late or corrected data? A: Load into staging, then upsert into the target. For corrections, use ON CONFLICT ... DO UPDATE to overwrite affected bars.

Q: How big should batches be? A: Aim for 100k–1M rows per COPY batch depending on hardware and WAL settings.

Q: Can I speed up backtests? A: Use covering indexes, partition pruning (time filters), and fetch only needed columns. Cache recent slices in memory if strategy allows.

Q: Is PostgreSQL enough for HFT? A: For sub-millisecond latencies, you’ll likely need in-memory stores. Postgres is excellent for research, ETL, and many live-trading workloads.