Overview

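Exit code 137 means the container's main process was terminated by SIGKILL (128 + 9). The most common cause is the kernel OOM killer: the container hit its memory limit, or the host ran out of memory. When the container's own limit is the cause, Docker records OOMKilled=true in the container state. A docker kill, or a docker stop that times out and escalates to SIGKILL, produces the same exit code, so confirm the cause before changing limits. This guide shows how to diagnose, reproduce, and fix the OOM case.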
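The 128 + signal convention behind exit code 137 can be checked with a few lines of Python. This is a minimal sketch: describe_exit_code is a hypothetical helper name, and it assumes every code above 128 came from a signal, which is the usual case but not guaranteed (a program can also call exit(137) itself).

```python
import signal


def describe_exit_code(code: int) -> str:
    """Explain a container exit code using the 128 + signal convention."""
    if code > 128:
        signum = code - 128
        try:
            name = signal.Signals(signum).name  # e.g. 9 -> SIGKILL
        except ValueError:
            name = f"signal {signum}"  # number not known on this platform
        return f"killed by {name} (128 + {signum})"
    return f"process exited on its own with status {code}"


if __name__ == "__main__":
    for code in (0, 1, 137, 143):
        print(code, "->", describe_exit_code(code))
```

Signal names come from the platform the script runs on, so run it on Linux to match what the container saw.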

Quickstart: Diagnose and fix

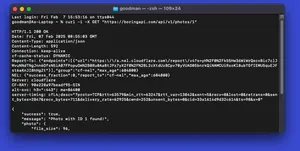

- Confirm OOMKilled
  - docker inspect -f '{{.State.OOMKilled}} {{.State.ExitCode}}' <container>
- Check memory pressure
  - docker stats <container>
  - On Linux (cgroup v2 path first, cgroup v1 as fallback): docker exec <container> sh -c 'cat /sys/fs/cgroup/memory.max 2>/dev/null || cat /sys/fs/cgroup/memory/memory.limit_in_bytes'
- Short-term fixes
  - Increase memory: --memory=512m (and optionally --memory-swap)
  - Reduce process memory: lower concurrency, stream I/O, cap runtime heaps
- Long-term fixes
  - Right-size limits in Compose/Swarm/Kubernetes
  - Add a memory reservation (a soft limit; Swarm also uses it for scheduling)
  - Profile memory and eliminate leaks
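The cgroup check in the list above can also be done from inside the container in Python. The sketch below tries the same two files in the same order. parse_limit, read_memory_limit, and the 2**60 threshold are illustrative assumptions: cgroup v2 writes "max" for no limit, while cgroup v1 reports a very large number, which this code treats as unlimited.

```python
from pathlib import Path
from typing import Optional

# cgroup v2 path first, then the cgroup v1 fallback used in the command above
LIMIT_FILES = (
    "/sys/fs/cgroup/memory.max",
    "/sys/fs/cgroup/memory/memory.limit_in_bytes",
)

# Assumption: cgroup v1 reports "no limit" as a huge page-aligned number,
# so anything at or above 2**60 bytes is treated as unlimited here.
UNLIMITED_THRESHOLD = 2**60


def parse_limit(text: str) -> Optional[int]:
    """Return the limit in bytes, or None when the cgroup is unlimited."""
    value = text.strip()
    if value == "max":  # cgroup v2 spelling of "no limit"
        return None
    limit = int(value)
    return None if limit >= UNLIMITED_THRESHOLD else limit


def read_memory_limit() -> Optional[int]:
    """Read the first limit file that exists; None if unlimited or not found."""
    for name in LIMIT_FILES:
        path = Path(name)
        if path.is_file():
            return parse_limit(path.read_text())
    return None


if __name__ == "__main__":
    limit = read_memory_limit()
    print("unlimited or unknown" if limit is None else f"{limit / 2**20:.0f} MiB")
```

Run inside a container started with --memory=128m, this should report 128 MiB; on a host without either file it falls back to "unlimited or unknown".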

Minimal working example (reproduce and fix)

This example allocates memory until the container is OOMKilled, then shows how to fix it by adjusting limits.

Dockerfile:

FROM python:3.12-alpine

WORKDIR /app

COPY memory_hog.py .

CMD ["python", "memory_hog.py"]

memory_hog.py:

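import time

chunks = []  # keep references so nothing is garbage-collected
chunk = b"x" * (64 * 1024 * 1024)  # 64 MiB template per allocation

while True:
    # bytearray(chunk) copies the template, so each pass adds a fresh 64 MiB
    chunks.append(bytearray(chunk))
    # flush=True so the line is written before the kernel kills the process
    print(f"Allocated ~{len(chunks) * 64} MiB", flush=True)
    time.sleep(0.2)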

Build and run with a tight memory limit to reproduce OOMKilled:

# Build

docker build -t memhog:demo .

# Run with 128 MiB limit (will OOM)

docker run --name memhog --memory=128m memhog:demo || true

# Verify OOMKilled and exit code 137

docker inspect -f '{{.State.OOMKilled}} {{.State.ExitCode}}' memhog

# Cleanup

docker rm memhog

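Fix by increasing memory and/or constraining behavior. The demo allocates without bound, so a higher limit only delays the kill; for a real service, set the limit above the measured peak:

# Raise memory limit and disable swap by setting memory-swap == memory

# (--rm removes the container on exit so the name can be reused)

docker run --rm --name memhog --memory=512m --memory-swap=512m memhog:demo

Optional: add a reservation (soft limit) where supported; it only takes effect when the host is short on memory:

# 512 MiB hard limit, 256 MiB reservation

docker run --rm --name memhog --memory=512m --memory-reservation=256m memhog:demo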
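Raising the limit covers one half of the fix; constraining behavior is the other. The sketch below is a bounded variant of memory_hog.py that stops allocating at a cap instead of growing forever. The allocate function and the MAX_MIB environment variable are hypothetical names chosen for illustration, not part of the original demo.

```python
import os
import time


def allocate(max_mib: int, chunk_mib: int = 64, pause: float = 0.2) -> list:
    """Allocate chunk_mib at a time, never holding more than max_mib in total."""
    chunks = []
    # Only allocate if the next chunk still fits under the cap
    while (len(chunks) + 1) * chunk_mib <= max_mib:
        # Repeating a one-byte bytearray fills the chunk with real data
        chunks.append(bytearray(b"x") * (chunk_mib * 1024 * 1024))
        print(f"Allocated ~{len(chunks) * chunk_mib} MiB", flush=True)
        time.sleep(pause)
    return chunks


if __name__ == "__main__":
    # MAX_MIB is a hypothetical variable; the default stays well under 512m
    held = allocate(int(os.environ.get("MAX_MIB", "128")))
    print(f"Holding {len(held)} chunks and staying under the cap", flush=True)
```

Passing the cap through the environment (for example docker run -e MAX_MIB=256 --memory=512m ...) keeps the application's budget and the container limit adjustable together.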

Setting limits with Docker Compose

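Docker Compose V2 (the docker compose plugin) applies deploy.resources limits to local containers. The older docker-compose v1 binary ignored the deploy section outside Swarm unless run with --compatibility, and relied on mem_limit instead. Compose V2 treats the top-level version key as obsolete and ignores it.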

Compose v2+ (deploy.resources):

version: "3.8"
services:
  app:
    image: memhog:demo
    deploy:
      resources:
        limits:
          memory: 512M
        reservations:
          memory: 256M

Legacy docker-compose (mem_limit/mem_reservation):

version: "2.4"
services:
  app:
    image: memhog:demo
    mem_limit: 512m
    mem_reservation: 256m

How to tell why it died

- docker inspect -f '{{json .State}}' <container> shows OOMKilled true/false and ExitCode 137.

- docker events --since 30m --filter event=oom lists the OOM events the daemon recorded in that window.

- docker stats shows live memory/limit; watch for usage nearing the limit.

What to check and which command to run:

| Check | Command |
|---|---|
| OOMKilled flag | docker inspect -f '{{.State.OOMKilled}}' <ctr> |
| Exit code | docker inspect -f '{{.State.ExitCode}}' <ctr> |
| Live usage | docker stats <ctr> |
| Cgroup limit | docker exec <ctr> sh -c 'cat /sys/fs/cgroup/memory.max 2>/dev/null \|\| cat /sys/fs/cgroup/memory/memory.limit_in_bytes' |
| Cgroup usage | docker exec <ctr> sh -c 'cat /sys/fs/cgroup/memory.current 2>/dev/null \|\| cat /sys/fs/cgroup/memory/memory.usage_in_bytes' |

Note: If the host is under global memory pressure, the kernel may kill containers even if they did not hit their cgroup limit.
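The checks in this section can be combined into a small triage script that reads the JSON from docker inspect -f '{{json .State}}' <container>. This is a sketch only: classify is a hypothetical helper, the wording of each diagnosis is an assumption, and it relies solely on the OOMKilled and ExitCode fields discussed above.

```python
import json


def classify(state: dict) -> str:
    """Turn the .State object from docker inspect into a one-line diagnosis."""
    exit_code = state.get("ExitCode")
    if state.get("OOMKilled"):
        return "OOM kill recorded for this container: check its memory limit"
    if exit_code == 137:
        return ("SIGKILL without the OOMKilled flag: check for docker kill, "
                "a stop timeout, or a host-level OOM in the kernel log")
    if exit_code == 143:
        return "SIGTERM: normal stop request"
    return f"exit code {exit_code}: not a kill signal covered here"


if __name__ == "__main__":
    # Sample input; in practice, pipe in the docker inspect output instead
    sample = '{"Status": "exited", "OOMKilled": true, "ExitCode": 137}'
    print(classify(json.loads(sample)))
```

The second branch is the one that matters for the note above: a 137 without the flag points away from the container's own limit and toward the host or an operator.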

Common fixes

- Raise the hard limit
  - docker run --memory=1g --memory-swap=1g image
  - For Compose, set limits as shown above.
- Reduce memory demand
  - Stream data instead of loading entire files into memory.
  - Process smaller batches, reuse buffers, and avoid unbounded caches.
  - Lower concurrency (threads, workers).
- Language/runtime tuning
  - Java: set -Xmx or -XX:MaxRAMPercentage below the container limit. Container support (-XX:+UseContainerSupport) is on by default in Java 10+ and 8u191+; older JVMs (8u131 to 8u181, and 9) need -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap or an explicit -Xmx.
  - Node.js: --max-old-space-size=512 (MiB) to cap the V8 old-generation heap.
  - Go: set GOMEMLIMIT=512MiB (Go 1.19+). It is a soft limit that makes the GC work harder as the heap approaches it; keep it below the container limit.
  - Python: prefer generators, bound in-memory queues; consider a smaller pandas chunksize.
- Reservations
  - Use --memory-reservation to record typical usage. It is a soft limit: under host memory contention the kernel tries to push the container back toward its reservation, which reduces noisy-neighbor risk.
- Swap (advanced)
  - --memory-swap sets total memory + swap; it must be >= --memory.
  - Set --memory-swap equal to --memory to disable swap; set it to -1 to allow unlimited swap (if host swap is enabled).
  - Be cautious: swap can hide issues and hurt latency.
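The "stream data instead of loading entire files" item above can be sketched in Python with a checksum that reads a file in fixed-size pieces. sha256_streaming and the 1 MiB chunk size are illustrative choices; the point is that memory use depends on the chunk size, not the file size.

```python
import hashlib


def sha256_streaming(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file while holding at most chunk_size bytes of it in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps calling read() until it returns b""
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

The read-all equivalent, hashlib.sha256(open(path, "rb").read()), gives the same digest but needs the whole file in memory at once, which is the pattern that tends to trip a tight limit.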

Pitfalls

- Limits not applied where you think
  - docker-compose v1 ignores deploy.resources; use mem_limit or upgrade to Compose v2.
- Units confusion
  - Docker's m and g suffixes are binary: 512m is 512 MiB (536,870,912 bytes) and 1g is 1 GiB. Kubernetes distinguishes 512M (decimal) from 512Mi (binary), so be consistent when translating limits.
- Disabling OOM killer
  - --oom-kill-disable can make the host unstable or hang the container; avoid it in production, and never use it without --memory.
- JVM/Node default heap sizes
  - Defaults may exceed your container limit; explicitly set heap sizes.
- Docker Desktop memory cap
  - On macOS/Windows, containers run in a VM; increase the Docker Desktop memory if needed.
- Host-level OOM
  - If many containers share the host with no limits, any spike can trigger the host OOM killer; set limits for all services.
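Two of the pitfalls above, units confusion and default heap sizes, come down to arithmetic. The sketch below converts a Docker-style size to bytes and derives a heap cap from it. Both function names are hypothetical. The parser handles only whole numbers with a single-letter suffix (Docker itself accepts more spellings), and the 0.75 fraction is an assumed rule of thumb rather than a documented default.

```python
# Binary multipliers for Docker's single-letter size suffixes
_MULTIPLIERS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def docker_size_to_bytes(value: str) -> int:
    """Convert a Docker-style size such as '512m' or '1g' to bytes."""
    text = value.strip().lower()
    if text and text[-1] in _MULTIPLIERS:
        return int(text[:-1]) * _MULTIPLIERS[text[-1]]
    return int(text)  # a bare number is already in bytes


def heap_cap_mib(limit: str, fraction: float = 0.75) -> int:
    """Suggest a heap cap in MiB as a fraction of the container limit.

    The 0.75 default is an assumed rule of thumb that leaves room for
    stacks, native buffers, and the runtime itself; tune it per service.
    """
    return int(docker_size_to_bytes(limit) * fraction) // 1024**2


if __name__ == "__main__":
    print(docker_size_to_bytes("512m"))  # bytes behind --memory=512m
    print(heap_cap_mib("512m"))          # candidate for -Xmx or --max-old-space-size
```

For a 512m container this suggests a 384 MiB heap, for example -Xmx384m or --max-old-space-size=384.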

Performance notes

- Right-size memory
  - Measure peak RSS at load; set --memory a bit above that, and reservation near typical usage.
- Detect leaks early
  - Add memory metrics and alerts (RSS, heap, cache size) per service.
- Favor streaming and backpressure
  - Use chunked I/O and bounded queues; avoid read-all patterns.
- GC tuning
  - Go: tweak GOGC or GOMEMLIMIT for throughput/footprint trade-offs.
  - JVM: profile live set; avoid oversizing caches; prefer G1/ZGC with container-aware settings.

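- Avoid large layer loads
  - Image size mainly affects disk use and pull time; what counts against the limit is what the process maps or reads at runtime. Keep images lean, drop unused dependencies, and strip debug symbols in production builds to lower baseline memory.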
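The "measure peak RSS, then set the limit a bit above it" advice can be sketched for a Python service using the standard resource module (Unix only). peak_rss_mib and suggested_limit_mib are hypothetical helpers, and the 1.25 headroom factor is an assumption to adjust from your own load tests.

```python
import math
import resource
import sys


def peak_rss_mib() -> float:
    """Peak resident set size of this process so far, in MiB."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in KiB on Linux and in bytes on macOS
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def suggested_limit_mib(peak_mib: float, headroom: float = 1.25) -> int:
    """Round peak * headroom up to a whole MiB; 1.25 is an assumed margin."""
    return math.ceil(peak_mib * headroom)


if __name__ == "__main__":
    peak = peak_rss_mib()
    print(f"peak RSS {peak:.1f} MiB, candidate --memory={suggested_limit_mib(peak)}m")
```

Logging this value at the end of a load test gives a measured starting point for --memory, with the reservation set nearer to typical usage.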

Verification checklist

- OOMKilled true and ExitCode 137 confirmed.

- Memory limit and reservation set as intended.

- Runtime heaps/concurrency tuned.

- docker stats shows headroom under expected load.

- No host-wide OOM events during load tests.
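The headroom item in this checklist can be automated by parsing one line of docker stats --no-stream --format '{{json .}}' <container>. The sketch below assumes the JSON contains a MemPerc field formatted like "23.44%"; verify that against your Docker version. has_headroom and the 80% threshold are hypothetical choices.

```python
import json


def has_headroom(stats_line: str, max_percent: float = 80.0) -> bool:
    """Return True when the container's memory usage is below max_percent."""
    stats = json.loads(stats_line)
    used_percent = float(stats["MemPerc"].rstrip("%"))
    return used_percent < max_percent


if __name__ == "__main__":
    # Sample line shaped like the docker stats JSON output described above
    sample = '{"Name": "memhog", "MemUsage": "120MiB / 512MiB", "MemPerc": "23.44%"}'
    print("headroom ok" if has_headroom(sample) else "too close to the limit")
```

A single sample can miss short spikes, so a load test would call this repeatedly and fail on the first reading above the threshold.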

Tiny FAQ

- Why 137?
  - 137 = 128 + 9 (process killed by SIGKILL).
- What about 143?
  - 143 = 128 + 15 (SIGTERM), typically graceful shutdown.
- Why did it die below the limit?
  - Either a host-wide OOM or a short spike that docker stats sampling missed. Check the cgroup counters (memory.events, and memory.peak on kernel 5.19+ with cgroup v2) and the host kernel log (dmesg or journalctl -k).
- Is swap a fix?
  - It can smooth spikes but hurts latency. Prefer fixing memory usage or increasing RAM.
- Kubernetes?
  - Map Docker --memory to resources.limits.memory and the reservation to resources.requests.memory (which also drives scheduling). A container killed at its limit is reported with reason OOMKilled and exit code 137.