Overview

In Docker and BuildKit, the messages "context canceled" or "rpc error: code = Canceled" mean the build’s controlling context was terminated. This is not a specific step failure; it’s a cancellation signal. Typical causes:

- Client-side cancellation (Ctrl+C, terminal disconnect, CI job timeout)

- Docker daemon or BuildKit worker restarted

- Network issues (registry, proxy, DNS, VPN drops) during pull/push

- Resource pressure (OOM kill, disk full, inode exhaustion)

- Oversized build context or slow I/O causing upstream timeouts

This guide focuses on Docker/BuildKit and Docker Buildx in local and CI/CD environments.

Quickstart (get unstuck fast)

- Re-run with plain logs

  - Command: `docker build --progress=plain --no-cache .`

  - If using buildx: `docker buildx build --progress=plain --no-cache .`

- Check the daemon/BuildKit logs

  - Native daemon: `journalctl -u docker` (Linux) or the Docker Desktop logs

  - Buildx container driver: `docker ps | grep buildkit`, then `docker logs <builder-container>`

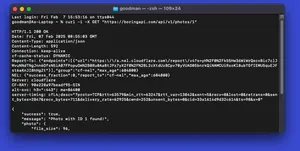

- Validate network and registry access

  - `docker pull <base-image>` to isolate pull failures

  - Re-login if pushing: `docker login <registry>`

- Free resources

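  - Disk: `docker system df` and `df -h` (free space); if needed, prune with `docker system prune -af --volumes`, which deletes all unused images, stopped containers, and build cache, and, with `--volumes`, unused volumes

  - Memory/CPU: reduce parallel CI jobs; ensure the runner has headroom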

- Recreate or switch the builder

  - `docker buildx ls`, then `docker buildx rm <name>`, then `docker buildx create --use --driver docker-container`

  - Bootstrap: `docker buildx inspect --bootstrap`

- Retry with the classic builder (diagnostic)

  - `DOCKER_BUILDKIT=0 docker build .` (the classic builder is deprecated)

  - If this works, the issue likely relates to BuildKit, its network access, or the builder container lifecycle
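The plain-log re-run is easier to analyze afterwards if its output is saved to a file. A minimal sketch, assuming a POSIX shell with `docker buildx` on the `PATH`; `capture_build_log` is a hypothetical helper, not a Docker command.

```shell
# Hypothetical helper: run a plain-progress buildx build, keep the whole
# log, and flag whether it ended in a cancellation.
# Usage: capture_build_log <logfile> [docker buildx build args...]
capture_build_log() {
  local log="$1" status
  shift
  # A POSIX pipeline only reports tee's exit status, so the build's own
  # status is written to a side file before the pipe closes.
  { st=0; docker buildx build --progress=plain "$@" 2>&1 || st=$?; echo "$st" >"$log.status"; } | tee "$log"
  status=$(cat "$log.status")
  rm -f "$log.status"
  if grep -qE 'context canceled|rpc error: code = Canceled' "$log"; then
    echo "cancellation found in $log; read the lines just before it" >&2
  fi
  return "$status"
}
```

For example, `capture_build_log build.log --no-cache .` shows the build live, keeps `build.log`, and returns the build's own exit status rather than that of `tee`.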

Minimal working example (MWE)

Dockerfile (simulates a long-running step):

```dockerfile
FROM busybox:1.36
RUN echo start && sleep 30 && echo done
```

Build and reproduce cancellation:

```shell
# Start a build (in terminal A); --no-cache makes the sleep step run again on repeat attempts
docker build --no-cache -t test-cancel .

# While it runs, press Ctrl+C (or close the terminal) to simulate a client cancel.
# You will typically see "context canceled" or "rpc error: code = Canceled".
```

Now apply the Quickstart fixes above and rebuild normally.
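The Ctrl+C step can also be scripted, which makes the reproduction repeatable without someone at the keyboard. A minimal sketch, assuming GNU coreutils `timeout` (macOS does not ship it by default) and the Dockerfile above in the current directory; `reproduce_cancel` is a hypothetical helper.

```shell
# Hypothetical helper: start the MWE build, then send SIGINT (what Ctrl+C
# delivers) after a delay, so the cancellation is reproducible.
# Assumes GNU coreutils timeout.
# Usage: reproduce_cancel [delay-seconds]
reproduce_cancel() {
  local delay="${1:-10}" status=0
  # --no-cache makes sure the 30-second RUN step executes on every attempt.
  timeout -s INT "$delay" docker build --no-cache -t test-cancel . || status=$?
  # GNU timeout exits with 124 when the time limit was hit and it sent the signal.
  if [ "$status" -eq 124 ]; then
    echo "client interrupted after ${delay}s; expect 'context canceled' in the output above" >&2
  fi
  return "$status"
}
```

Running `reproduce_cancel 5` should print the same kind of cancellation error as pressing Ctrl+C by hand and return 124.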

Common causes and targeted fixes

| Signal you see | Likely cause | What to try |
|---|---|---|
| context canceled right after Ctrl+C | User or CI canceled | Don’t cancel; run inside tmux/screen over SSH; increase CI timeouts |
| rpc error: code = Canceled during pull | Network/DNS/proxy | Test `docker pull`; set reliable DNS; configure proxy env vars; retry |
| Canceled during push/export | Registry auth/token or network | `docker login`; avoid flaky VPNs; retry with `--progress=plain` |
| Canceled mid-build at random | Daemon/BuildKit restart or OOM | Check logs; ensure enough RAM; avoid daemon restarts; keep a stable builder |
| Fails on large contexts | Slow send, socket timeout | Shrink the context with `.dockerignore`; avoid sending `node_modules` |
| CI-only failures | Job timeouts/runner cleanup | Increase job timeout; pin buildx version; use a persistent builder cache |
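When the build log has been saved to a file, the first rows of this table can be checked mechanically. A rough sketch: `classify_cancel_log` is a hypothetical helper, and the matched substrings (for example `no space left on device`, `no such host`, `exit code: 137`) are common Docker and Go error fragments chosen as heuristics, not an official or complete list. A Ctrl+C, CI timeout, or daemon restart usually leaves no distinctive fragment, so those fall through to `unknown`.

```shell
# Hypothetical helper: map a saved build log to a likely cause from the table.
# Patterns are heuristic substrings, not an official list of Docker errors.
# Usage: classify_cancel_log <logfile>
classify_cancel_log() {
  local log="$1"
  if ! grep -qE 'context canceled|rpc error: code = Canceled' "$log"; then
    echo "not-a-cancellation"   # look for an ordinary step error instead
  elif grep -qi 'no space left on device' "$log"; then
    echo "disk"                 # free space/inodes, prune
  elif grep -qiE 'out of memory|oom-kill|exit code: 137' "$log"; then
    echo "memory"               # add RAM, reduce parallel builds
  elif grep -qiE 'unauthorized|authentication required|denied: requested access' "$log"; then
    echo "auth"                 # docker login, refresh CI tokens
  elif grep -qiE 'i/o timeout|no such host|TLS handshake timeout|connection reset' "$log"; then
    echo "network"              # test docker pull, DNS, proxy settings
  else
    echo "unknown"              # client/CI cancel or daemon restart: check logs
  fi
}
```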

Step-by-step troubleshooting

- Confirm it’s a cancellation

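  - Look for the exact strings "context canceled" or "rpc error: code = Canceled"

  - If a step error appears before them, fix that root cause first; when one step fails, BuildKit cancels the steps running in parallel, so those also report "context canceled"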

- Inspect builder health

  - Buildx: `docker buildx ls` (is your builder running?)

  - Restart the builder: `docker buildx rm <name>`; `docker buildx create --use --driver docker-container`; `docker buildx inspect --bootstrap`

- Check daemon and system logs

  - Linux daemon: `journalctl -u docker -n 200`

  - Look for restarts, OOM kills, or storage/overlayfs errors; OOM kills are logged by the kernel, so also check `journalctl -k` or `dmesg`

- Verify resources

  - Disk usage: `docker system df`, `df -h`, and `df -i`

  - Free space and inodes; prune aggressively if safe: `docker system prune -af --volumes`

- Test network and registry

  - Pull the base image separately: `docker pull <image>`

  - If using a proxy/VPN, test without it; set the `HTTP_PROXY`/`HTTPS_PROXY` build args only if needed

  - In trusted environments, retry with `--network host` to rule out DNS issues

- Stabilize auth when pushing

  - Re-login: `docker login <registry>`

  - For CI, ensure tokens don’t expire mid-build; refresh them or shorten the build

- Reduce context size and I/O

  - Create a `.dockerignore` that excludes large or irrelevant directories (e.g., `.git`, build outputs, `node_modules` when not needed)

  - Consider multi-stage builds to keep layers minimal

- Isolate BuildKit vs classic

  - Test with `DOCKER_BUILDKIT=0 docker build .`

  - If classic succeeds but BuildKit fails, keep BuildKit but:

    - Recreate the builder

    - Avoid daemon restarts during builds

    - Reduce network complexity (no flaky proxies)

- Pin and persist builder in CI

  - Create a named builder once per runner and reuse it:

    ```shell
    docker buildx create --name ci-builder --driver docker-container --use
    docker buildx inspect --bootstrap
    ```

  - Avoid job steps that prune or remove the builder container mid-pipeline

- Re-run with detailed progress

  - `docker buildx build --progress=plain .` to see which step was active
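The "create once, reuse" advice for CI can be made idempotent, so the same job step works both on a fresh runner and on one that already has the builder. A sketch; `ensure_builder` is a hypothetical helper and `ci-builder` is just the example name used above.

```shell
# Hypothetical CI helper: reuse a named docker-container builder if it
# already exists on this runner, otherwise create it, then bootstrap it.
# Usage: ensure_builder [name]   (defaults to ci-builder)
ensure_builder() {
  local name="${1:-ci-builder}"
  if docker buildx inspect "$name" >/dev/null 2>&1; then
    docker buildx use "$name"
  else
    docker buildx create --name "$name" --driver docker-container --use
  fi
  # Start the BuildKit container now, so startup problems surface here
  # rather than in the middle of a build.
  docker buildx inspect --bootstrap "$name"
}
```

Calling `ensure_builder ci-builder` at the start of each job, before `docker buildx build`, keeps one builder (and its cache) alive across jobs on that runner.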

Useful configuration snippets

Set reliable DNS for the Docker daemon (Linux):

```json
{
  "dns": ["1.1.1.1", "8.8.8.8"],
  "features": { "buildkit": true }
}
```

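Save this as `/etc/docker/daemon.json` (merging it with any existing settings), then restart the daemon, e.g. `sudo systemctl restart docker`. Restarting cancels in-flight builds, so do it between builds.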
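A syntax error in `daemon.json` keeps the daemon from starting, which turns a DNS tweak into an outage. A small pre-restart check, sketched with Python's `json.tool` module; this assumes `python3` is installed, checks JSON syntax only (not Docker option names), and `check_daemon_json` is a hypothetical helper.

```shell
# Hypothetical helper: refuse to restart Docker if daemon.json is not valid JSON.
# Assumes python3 is available; only JSON syntax is checked.
# Usage: check_daemon_json [path]   (defaults to /etc/docker/daemon.json)
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  if python3 -m json.tool "$file" >/dev/null 2>&1; then
    echo "ok: $file parses as JSON"
  else
    echo "error: $file is not valid JSON; not restarting" >&2
    return 1
  fi
}

# Restart only when the check passes (restarting cancels in-flight builds):
# check_daemon_json && sudo systemctl restart docker
```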

Pitfalls

- Closing an SSH session without tmux/screen sends a hangup to the client, which cancels the build

- CI steps that run `docker system prune -af` while a build is running can remove the builder and cancel the build

- Aggressive antivirus or filesystem watchers can slow I/O, hitting timeouts

- Very large contexts over remote Docker Engine connections are prone to socket resets

Performance notes

- Use .dockerignore to reduce sent context; this speeds up builds and avoids transport timeouts

- Multi-stage builds reduce layer size and push time; fewer bytes means fewer cancellation windows

- Reuse a persistent buildx builder with local cache mounts to shrink rebuild times

- Avoid unnecessary `--no-cache` after the initial diagnostic; leverage the cache for stability

- If the network is flaky, prefer `--load` locally and push in a separate, retry-capable step
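The separate, retry-capable push step can be as simple as a loop with exponential backoff around `docker push`. A minimal sketch; `retry`, the attempt count, the delays, and the image name `registry.example.com/app:ci` are illustrative assumptions.

```shell
# Hypothetical helper: run a command up to <max> times, doubling the wait
# between attempts. Returns 0 on the first success, 1 if every attempt fails.
# Usage: retry <max-attempts> <initial-delay-seconds> <command> [args...]
retry() {
  local max="$1" delay="$2" attempt=1
  shift 2
  while :; do
    "$@" && return 0
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt/$max failed; retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}

# Example CI sequence (hypothetical image name): build and load locally,
# then push with retries.
# docker buildx build --load -t registry.example.com/app:ci .
# retry 4 5 docker push registry.example.com/app:ci
```

Keeping the retry around the push alone means a network flap costs one re-upload, not a full rebuild.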

Tiny FAQ

Q: Is this a Docker bug? A: Usually no. It’s a cancellation propagated via gRPC when the client, daemon, or builder stops.

Q: Why only in CI? A: CI often cancels on timeouts or parallel job cleanup. Increase timeouts and keep a persistent builder.

Q: How do I know if it’s OOM? A: Check system and daemon logs for OOM kill messages. Reduce parallel builds or increase memory.
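On Linux, OOM kills are recorded in the kernel log rather than in the build output, so filtering that log is usually the quickest check. A sketch; `find_oom_kills` is a hypothetical helper, and the patterns are typical kernel message fragments (such as `Out of memory: Killed process`), not an exhaustive list.

```shell
# Hypothetical helper: filter kernel log lines (read from stdin) for
# OOM-killer activity. Prints matching lines; like grep, returns 0 if
# anything matched and 1 otherwise.
# Usage: journalctl -k --since "2 hours ago" | find_oom_kills
#    or: dmesg | find_oom_kills
find_oom_kills() {
  grep -iE 'out of memory|oom-kill|killed process'
}
```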

Q: Can I make builds resilient to network flaps? A: Keep layers small, use retries in the push step, and ensure stable DNS/proxy settings.