Overview

If docker exec fails with "Error response from daemon: Container <id> is restarting, wait until the container is running", the container’s main process keeps exiting and Docker’s restart policy keeps starting it again. You cannot exec into a container while it’s in the Restarting state. Fix the root cause, or start the container in a stable state for debugging.

This guide shows fast diagnostics, a minimal example, and practical recovery steps.

Quickstart: fix it fast

Identify the container state

- Run:

docker ps -a --filter name=<name>

- Check the status, restart flag, and last exit code:

docker inspect -f '{{.State.Status}} {{.State.Restarting}} {{.State.ExitCode}}' <name>

- Run:

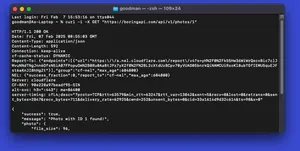
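To script this check, here is a small sketch. It assumes a POSIX-style shell (sh or bash) with the docker CLI on PATH; container_state and is_restarting are hypothetical helper names, not Docker commands. The fields are standard docker inspect fields: .State.Status, .State.Restarting, .State.ExitCode, .State.OOMKilled, and the top-level .RestartCount.

```shell
# Hypothetical helpers; both take a container name or ID as $1.

# One-line summary of the fields that usually explain a restart loop.
container_state() {
  docker inspect -f 'status={{.State.Status}} restarting={{.State.Restarting}} exit={{.State.ExitCode}} oom={{.State.OOMKilled}} restarts={{.RestartCount}}' "$1"
}

# Exit status 0 only while Docker reports the container as Restarting,
# which is exactly when docker exec is rejected.
is_restarting() {
  [ "$(docker inspect -f '{{.State.Restarting}}' "$1")" = "true" ]
}
```

For example, is_restarting myapp && container_state myapp prints the summary only while the loop is active.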

Read the logs

docker logs --tail=200 <name>

- To watch new output as the container keeps restarting, follow the log stream:

docker logs -f <name>

Temporarily disable restart policy and stop

docker update --restart=no <name>

docker stop <name>

- The container now stays Exited for inspection.
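The same two steps can be wrapped so the original policy is not lost. This is a sketch assuming a POSIX-style shell; stabilize is a hypothetical helper name. It reads .HostConfig.RestartPolicy.Name before changing anything (for on-failure policies, .HostConfig.RestartPolicy.MaximumRetryCount also matters when you restore it).

```shell
# Hypothetical helper: disable the restart policy of container $1, stop it,
# and print the previous policy name so it can be restored later with
#   docker update --restart=<policy> <container>
stabilize() {
  local old_policy
  old_policy=$(docker inspect -f '{{.HostConfig.RestartPolicy.Name}}' "$1") || return 1
  # Clear the policy first, so a later docker start -ai runs the
  # entrypoint once instead of re-entering the loop.
  docker update --restart=no "$1" >/dev/null || return 1
  docker stop "$1" >/dev/null || return 1
  echo "$old_policy"
}
```

For example, policy=$(stabilize myapp) keeps the old value in a variable while you debug.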

Reproduce the crash interactively to see the error

docker start -ai <name>

- This attaches to the main process (PID 1), so its startup output, including stderr, prints in your terminal.

Debug with a shell by overriding entrypoint

docker run --rm -it --entrypoint sh <image>

- Re-add the env vars and mounts the crashed container needs, so the debug shell mirrors its environment.
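Copying env vars and mounts by hand is error-prone, so here is a sketch that reads them from the crashed container. It assumes a POSIX-style shell, that the image ships sh, that no env value contains a newline, and that mounts were created with -v (these appear in .HostConfig.Binds; --mount mounts are not copied). debug_shell_like is a hypothetical helper name.

```shell
# Hypothetical helper: open a throwaway shell from the same image as
# container $1, with the same environment variables and -v binds.
debug_shell_like() {
  local container image envs binds kv bind
  container=$1
  image=$(docker inspect -f '{{.Config.Image}}' "$container") || return 1
  envs=$(docker inspect -f '{{range .Config.Env}}{{println .}}{{end}}' "$container") || return 1
  binds=$(docker inspect -f '{{range .HostConfig.Binds}}{{println .}}{{end}}' "$container") || return 1

  set --                      # reuse the positional params as an argument list
  while IFS= read -r kv; do   # one KEY=value per line
    [ -n "$kv" ] && set -- "$@" -e "$kv"
  done <<EOF
$envs
EOF
  while IFS= read -r bind; do # one src:dst[:opts] per line
    [ -n "$bind" ] && set -- "$@" -v "$bind"
  done <<EOF
$binds
EOF

  docker run --rm -it --entrypoint sh "$@" "$image"
}
```

Usage: debug_shell_like myapp, then run the original command by hand inside the shell to see where it fails.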

Fix configuration and recreate

- Adjust command, config, env, or volumes.

- Run docker rm <name>, then docker run ... with the corrected settings (or docker compose up -d).

Minimal reproducible example

This simulates a crash loop that triggers the error.

Dockerfile:

FROM alpine:3.20

# Simulate a startup failure

CMD ["sh", "-c", "echo starting; exit 1"]

Commands:

# Build and run with a restart policy

docker build -t crash-img .

docker run -d --name crash --restart=always crash-img

# Exec will fail while container restarts

docker exec -it crash sh # → Error: Container is restarting

# Diagnose

docker ps -a

docker logs --tail=50 crash

# Stabilize for debugging

docker update --restart=no crash

docker stop crash

docker start -ai crash # shows the startup error

# or drop into a shell without running the crashing CMD

docker run --rm -it --entrypoint sh crash-img

Why it’s restarting (common causes)

- Wrong command or entrypoint: typo, missing binary, bad flags.

- Missing env vars or secrets: application exits on configuration error.

- Unavailable services: database/queue not reachable, app exits.

- Permission or path errors: volume mount points differ from expectations.

- Port conflicts or resource limits: the process fails to bind a port or allocate memory, or is OOM-killed.

- Application bug/panic: crashes immediately after start.

Note: Docker does not restart a container just because it is Unhealthy; it restarts only when the main process exits and a restart policy is set.
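The last exit code often points at one of these causes. The sketch below maps common codes to likely explanations; the mapping follows general Unix conventions (126/127 from the shell, 128+N for death by signal N), not a Docker guarantee, since an application may exit with any code. explain_exit_code is a hypothetical helper name. Note that with --restart=always, Docker restarts the container even after exit code 0.

```shell
# Hypothetical helper: map an exit code ($1) to a likely cause.
# Conventions only; read the logs to confirm.
explain_exit_code() {
  case "$1" in
    0)   echo "exited normally (a long-running service should not exit)" ;;
    1)   echo "generic application error; read the logs" ;;
    126) echo "command found but not executable (permissions or format)" ;;
    127) echo "command not found (typo in entrypoint/cmd, or missing binary)" ;;
    137) echo "killed by SIGKILL (128+9); check .State.OOMKilled and memory limits" ;;
    139) echo "segmentation fault (128+11)" ;;
    143) echo "terminated by SIGTERM (128+15)" ;;
    *)
      if [ "$1" -gt 128 ] 2>/dev/null; then
        echo "killed by signal $(( $1 - 128 ))"
      else
        echo "application-specific exit code; read the logs"
      fi ;;
  esac
}
```

Usage: explain_exit_code "$(docker inspect -f '{{.State.ExitCode}}' myapp)".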

Recovery techniques

1) Disable restart policy to inspect

docker update --restart=no <container>

docker stop <container>

# Now it remains Exited; you can read historical logs and state.

docker logs <container>
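Because step 6 removes the container, it can help to save this evidence first. A minimal sketch, assuming a POSIX-style shell; snapshot_container and the output file names are hypothetical choices.

```shell
# Hypothetical helper: save inspect output and full logs of container $1
# into directory $2 before you run docker rm, so the evidence survives.
snapshot_container() {
  mkdir -p "$2" || return 1
  docker inspect "$1" > "$2/inspect.json" || return 1
  # docker logs replays the container's stdout and stderr; keep both streams.
  docker logs --timestamps "$1" > "$2/logs.txt" 2>&1 || return 1
  echo "$2"
}
```

Usage: snapshot_container myapp ./myapp-crash, then attach the directory to a bug report.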

2) Attach to PID 1 to capture startup errors

docker start -ai <container>

# Watch the exact failure printed by the main process.

3) Start a shell instead of the crashing command

- One-off debug container from the same image:

docker run --rm -it \
  --name debug \
  --entrypoint sh \
  -e VAR1=... -v /host/path:/container/path:rw \
  <image>

- In Compose:

docker compose run --rm --no-deps --entrypoint sh <service>

Use the same env and mounts as the failing container to reproduce.

4) Inspect config, env, and mounts

# Summaries

docker inspect -f '{{.Config.Entrypoint}} {{.Config.Cmd}}' <container>

docker inspect -f '{{range .Config.Env}}{{println .}}{{end}}' <container>

docker inspect -f '{{.HostConfig.Binds}}' <container>

Fix mismatches (paths, permissions, missing files, wrong env values).
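One path mismatch is easy to miss: when a -v source does not exist on the host, Docker creates it as an empty directory, so an app that expected a file finds a directory instead. The sketch below flags missing or empty bind sources. It assumes a POSIX-style shell, that the Docker daemon runs on this same host (not in a VM or remote), and Unix-style absolute paths; named volumes are skipped. check_bind_sources is a hypothetical helper name.

```shell
# Hypothetical helper: report bind-mount sources of container $1 that are
# missing, or are empty directories (possibly auto-created by Docker).
# Returns 1 if anything suspicious was found, 2 if inspect failed.
check_bind_sources() {
  local binds bind src problems=0
  binds=$(docker inspect -f '{{range .HostConfig.Binds}}{{println .}}{{end}}' "$1") || return 2
  while IFS= read -r bind; do
    src=${bind%%:*}           # text before the first colon
    case "$src" in
      /*)                     # absolute host path; named volumes are skipped
        if [ ! -e "$src" ]; then
          echo "missing: $src"; problems=1
        elif [ -d "$src" ] && [ -z "$(ls -A "$src")" ]; then
          echo "empty directory: $src"; problems=1
        fi ;;
    esac
  done <<EOF
$binds
EOF
  return "$problems"
}
```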

5) Override command to keep it alive for exec

If you need a running target for exec while you investigate:

docker run -d --name crashdebug \
  --entrypoint sh <image> -c 'sleep infinity'

# Now you can docker exec -it crashdebug sh

Mirror volumes and env from the failing container so the environment matches.
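To avoid racing the start, a sketch that waits for the debug container to report running before exec'ing in. It assumes a POSIX-style shell and that the image ships sh; exec_when_running is a hypothetical helper name. It checks .State.Status rather than .State.Running, because Docker can report Running as true while a container is also Restarting.

```shell
# Hypothetical helper: wait up to $2 seconds (default 10) for container $1
# to reach status "running", then open a shell in it.
exec_when_running() {
  local i=0 limit=${2:-10}
  while [ "$i" -lt "$limit" ]; do
    if [ "$(docker inspect -f '{{.State.Status}}' "$1")" = running ]; then
      docker exec -it "$1" sh
      return                  # propagate docker exec's exit status
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "$1 is not running after ${limit}s" >&2
  return 1
}
```

Usage: exec_when_running crashdebug.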

6) Recreate cleanly

Once fixed, recreate instead of mutating:

docker rm -f <container>

# Run or compose with corrected args

# docker run ...

# or

# docker compose up -d
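After recreating, it is worth confirming the loop is really gone. A sketch for the Compose case, assuming the Compose v2 plugin (docker compose), one container per service, and an arbitrary 15-second default grace period; recreate_and_verify is a hypothetical helper name. A freshly recreated container starts with RestartCount 0, so "running 0" after the grace period means it has not restarted yet.

```shell
# Hypothetical helper: recreate Compose service $1, wait $2 seconds
# (default 15), then succeed only if it is running and has not restarted.
recreate_and_verify() {
  local cid status
  docker compose up -d --force-recreate "$1" || return 1
  sleep "${2:-15}"
  cid=$(docker compose ps -q "$1") || return 1
  status=$(docker inspect -f '{{.State.Status}} {{.RestartCount}}' "$cid") || return 1
  echo "$1: $status"
  [ "$status" = "running 0" ]
}
```

A longer grace period is safer for apps that fail only after connecting to a database or queue.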

Quick symptom-to-action map

| Symptom | Action |
|---|---|
| docker exec says "Container is restarting" | Disable the restart policy, stop, and inspect logs; or run with a shell entrypoint. |
| docker ps shows Restarting (<exit code>) N seconds ago | docker logs -f, docker start -ai, review the command/entrypoint. |
| No useful logs | Attach with docker start -ai, increase app verbosity, verify the entrypoint path. |
| Works locally but not with volumes | Check mount paths and permissions; ensure expected files exist. |

Pitfalls

- Trying to exec into a Restarting container will never work; exec requires Running state.

- Healthchecks don’t trigger restarts by themselves; look for an exiting PID 1.

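- PID 1 signal handling: a process running as PID 1 gets no default signal handlers, so some apps ignore SIGTERM and are only killed when docker stop times out; use a proper init such as tini, or run with docker run --init.

- Changes made inside a container’s filesystem are lost when it is recreated; rebuild the image with the fix.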

- Restart loops can mask the real error message; use docker start -ai to see it.

Performance notes

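- Restart backoff: Docker doubles the delay before each restart, starting at 100 ms and capping at 1 minute; the delay resets once a restarted container stays up for at least 10 seconds. This reduces thrash, but a looping container still burns resources.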

- Log volume: crash loops can flood logs and disk. Use log rotation:

docker run \
  --log-driver json-file \
  --log-opt max-size=10m --log-opt max-file=3 \
  ...

- Resource waste: loops consume CPU, IO, and orchestrator retries. Pause by disabling restart until fixed.
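To find and pause every loop on a host at once, a sketch assuming a POSIX-style shell; pause_restart_loops is a hypothetical helper name. It relies on docker ps -a --filter status=restarting, and remember to restore each policy (docker update --restart=...) once the fix is in.

```shell
# Hypothetical helper: list containers currently in the Restarting state.
# With --disable as $1, also clear each one's restart policy and stop it.
pause_restart_loops() {
  local name
  for name in $(docker ps -a --filter status=restarting --format '{{.Names}}'); do
    echo "restarting: $name"
    if [ "${1:-}" = "--disable" ]; then
      docker update --restart=no "$name" >/dev/null && docker stop "$name" >/dev/null
    fi
  done
}
```

Run it without arguments first to review the list, then with --disable.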

FAQ

Why can’t I exec into a restarting container?

- Exec requires the container to be in Running state; during restart it’s not.

Can I just wait until it’s running and exec quickly?

- You can try, but if the process crashes immediately you are racing the restart loop and will usually lose. Stabilize it with a shell entrypoint instead.

Does an Unhealthy healthcheck cause restarts?

- Not in plain Docker. Restarts happen when PID 1 exits and a restart policy is set.

How do I see the exact startup error?

- Use docker start -ai <container> to attach to PID 1 and view stderr at startup.

Should I change the container in place to fix it?

- Prefer fixing the image/config and recreating the container for reproducibility.