Overview

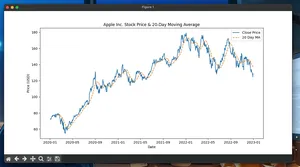

Double-top (M-shape) and double-bottom (W-shape) patterns are classic reversal setups. This guide shows how to detect them from closing prices using pandas and numpy, with clear validation rules and breakout confirmation suitable for backtesting or signal generation.

Highlights:

- Swing-point detection via local extrema

- Strict tolerance and separation rules

- Optional breakout confirmation beyond the neckline

- Backtest-ready outputs (timestamps and indices)

Quickstart

- Install dependencies: pandas and numpy.

- Copy the detection functions below into your project.

- Feed a price series (e.g., close prices) and tune parameters.

- Use the returned patterns DataFrame to create entry/exit rules in a backtest.
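The last step can be sketched with a hypothetical helper that maps each pattern's breakout onto a per-bar signal series: -1 for a double top, +1 for a double bottom, and 0 elsewhere. It assumes only the `pattern` and `breakout` columns produced by `detect_double_patterns`. The example frame is hand-made, not real output, and position sizing and exits are left out.

```python
import pandas as pd


def patterns_to_signals(patterns: pd.DataFrame, index: pd.Index) -> pd.Series:
    """Hypothetical helper: mark breakout bars in a per-bar signal series.

    -1 = double-top breakout bar, +1 = double-bottom breakout bar, 0 elsewhere.
    Rows without a breakout (None/NaN) are skipped.
    """
    signals = pd.Series(0, index=index, dtype=int)
    direction = {'double_top': -1, 'double_bottom': 1}
    for row in patterns.dropna(subset=['breakout']).itertuples(index=False):
        signals.loc[row.breakout] = direction[row.pattern]
    return signals


# Hand-made frame with the same column names the detector returns;
# object dtype keeps the integer labels next to the missing breakout.
patterns = pd.DataFrame({
    'pattern': ['double_top', 'double_bottom', 'double_top'],
    'breakout': pd.Series([84, 178, None], dtype=object),
})
signals = patterns_to_signals(patterns, pd.RangeIndex(250))
print(signals[signals != 0])
```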

Minimal working example

```python
import numpy as np
import pandas as pd


# --- Swing detection ---
def find_swings(series: pd.Series, w: int = 3, prominence: float = 0.005) -> pd.DataFrame:
    """Identify local peaks/troughs using a centered window of w bars on each side.

    A swing at position i is only confirmed once bar i + w has closed, so never act
    on it before that bar (using it at its own timestamp is look-ahead).
    prominence is relative to price (e.g., 0.005 = 0.5%).

    Returns DataFrame with columns: timestamp, type ('peak'/'trough'), price, pos (int index).
    """
    arr = series.astype(float).to_numpy()
    swings = []
    for i in range(w, len(arr) - w):
        p = arr[i]
        left, right = arr[i - w:i], arr[i + 1:i + 1 + w]
        lmax, rmax = left.max(), right.max()
        lmin, rmin = left.min(), right.min()
        # Peak: above the previous w bars, not below the next w, clearing both by prominence.
        if p > lmax and p >= rmax and (p - max(lmax, rmax)) >= prominence * p:
            swings.append((series.index[i], 'peak', float(p), i))
        # Trough: the mirror-image rule.
        elif p < lmin and p <= rmin and (min(lmin, rmin) - p) >= prominence * p:
            swings.append((series.index[i], 'trough', float(p), i))
    return pd.DataFrame(swings, columns=['timestamp', 'type', 'price', 'pos'])
```

```python
# --- Pattern detection ---
PATTERN_COLUMNS = ['pattern', 'p1', 'mid', 'p2', 'neckline', 'confirmed', 'breakout',
                   'p1_pos', 'mid_pos', 'p2_pos', 'confirmed_pos', 'breakout_pos']


def detect_double_patterns(
    series: pd.Series,
    w: int = 3,
    prominence: float = 0.005,
    peak_tolerance: float = 0.01,
    min_separation: int = 5,
    max_width: int = 60,
    confirm_breakout: bool = True,
    max_breakout_bars: int = 10,
) -> pd.DataFrame:
    """Detect double-top and double-bottom patterns.

    - peak_tolerance: max relative difference between the two tops/bottoms (e.g., 0.01 = 1%).
    - min_separation: minimum bars between first and second top/bottom.
    - max_width: maximum bars from first to second top/bottom.
    - confirm_breakout: keep only patterns whose close crosses the neckline
      within max_breakout_bars after the second top/bottom.

    Returns one row per pattern with timestamps and integer positions; 'confirmed'
    is the bar on which the second top/bottom becomes known (its position + w).
    """
    s = series.astype(float)
    close = s.to_numpy()
    sw = find_swings(s, w=w, prominence=prominence)
    types = sw['type'].to_numpy()
    price = sw['price'].to_numpy()
    pos = sw['pos'].to_numpy()
    flipped = -price  # bottoms become "tops" of the negated series
    patterns = []

    for a in range(len(sw) - 2):
        # Double top: peak -> trough -> peak; double bottom: trough -> peak -> trough.
        if types[a + 1] == types[a]:
            continue
        is_top = types[a] == 'peak'
        sign = 1.0 if is_top else -1.0
        x = price if is_top else flipped
        idx1, v1 = int(pos[a]), float(price[a])

        for b in range(a + 2, len(sw)):
            if types[b] != types[a]:
                continue
            idx2, v2 = int(pos[b]), float(price[b])
            if idx2 - idx1 < min_separation:
                continue
            if idx2 - idx1 > max_width:
                break
            inner = np.arange(a + 1, b)
            same = types[inner] == types[a]
            # An intervening top (bottom) beyond both extremes invalidates the pair.
            if np.any(x[inner[same]] > max(x[a], x[b])):
                continue
            # Neckline: lowest intervening trough (top) / highest intervening peak (bottom).
            opposite = inner[~same]
            m = int(opposite[np.argmin(x[opposite])])
            idx_mid, v_mid = int(pos[m]), float(price[m])

            similar = abs(v2 - v1) / max(v1, v2) <= peak_tolerance
            clear_mid = (min(x[a], x[b]) - x[m]) >= prominence * v_mid
            if not (similar and clear_mid):
                continue

            # First close beyond the neckline within max_breakout_bars after the second extreme.
            look_to = min(len(close) - 1, idx2 + max_breakout_bars)
            hits = np.flatnonzero(sign * close[idx2 + 1:look_to + 1] < sign * v_mid)
            breakout = idx2 + 1 + int(hits[0]) if hits.size > 0 else None
            if confirm_breakout and breakout is None:
                break  # the first valid right top/bottom never broke out
            patterns.append({
                'pattern': 'double_top' if is_top else 'double_bottom',
                'p1': s.index[idx1], 'mid': s.index[idx_mid], 'p2': s.index[idx2],
                'neckline': v_mid,
                'confirmed': s.index[idx2 + w],
                'breakout': s.index[breakout] if breakout is not None else None,
                'p1_pos': idx1, 'mid_pos': idx_mid, 'p2_pos': idx2,
                'confirmed_pos': idx2 + w, 'breakout_pos': breakout,
            })
            break  # take the first valid right top/bottom

    return pd.DataFrame(patterns, columns=PATTERN_COLUMNS)
```

```python
# --- Demo with synthetic data ---
np.random.seed(7)
N = 250
base = 100 + np.cumsum(np.random.normal(0, 0.25, N))

# Inject a plausible double-top around t ~ 80
base[70:86] = np.array([103, 105, 108, 110, 111, 109, 106, 104, 106, 109, 111, 110, 108, 105, 102, 100])

# Inject a double-bottom around t ~ 170
base[160:176] = np.array([98, 96, 95, 96, 98, 97, 95, 94, 95, 97, 98, 99, 100, 101, 102, 104])

prices = pd.Series(base, index=pd.RangeIndex(start=0, stop=N, step=1), name='close')

patterns = detect_double_patterns(
    prices,
    w=3,
    prominence=0.006,
    peak_tolerance=0.015,
    min_separation=4,
    max_width=40,
    confirm_breakout=True,
    max_breakout_bars=10,
)

print(patterns)
```

How it works

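- Find swings:
  - A point is a peak if it is above the highest of the previous w bars, not below the highest of the next w bars, and clears the higher of the two by at least prominence (a fraction of its price).
  - A point is a trough by the mirror-image rule: below the lowest of the previous w bars, not above the lowest of the next w bars, and at least prominence below the lower of the two.
  - Because the window is centered, a swing at bar i is only confirmed once bar i + w has closed. This w-bar lag avoids look-ahead in backtests only if signals are acted on no earlier than bar i + w.

- Candidate formation:
  - Double top = peak → trough → peak; double bottom = trough → peak → trough.

- Validation rules:
  - The two tops/bottoms are within peak_tolerance of each other (e.g., 1–2%).
  - The intervening valley sits at least prominence (relative to its price) below the lower top; for a double bottom, the intervening peak sits at least that far above the higher bottom.
  - The number of bars between the two tops/bottoms lies within [min_separation, max_width].

- Breakout confirmation (optional):
  - Double top: a close below the neckline (the intervening trough) within max_breakout_bars.
  - Double bottom: a close above the neckline (the intervening peak) within max_breakout_bars.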
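To make the validation and breakout rules concrete, here is a standalone check of a single double-top candidate, applied to the values injected in the demo. The tops are at bars 74 and 80 (111), the trough is at bar 77 (104), and bars 81–85 close at 110, 108, 105, 102, 100. `check_double_top` is a hypothetical helper that mirrors the rules listed above. It takes the candidate's prices directly instead of scanning a series.

```python
def check_double_top(v1, v_mid, v2, bars_apart, post_closes,
                     prominence=0.006, peak_tolerance=0.015,
                     min_separation=4, max_width=40, max_breakout_bars=10):
    """Hypothetical helper: apply the double-top rules to one candidate.

    Returns how many bars after the second top the first close below the
    neckline occurs (1-based), or None if any rule fails.
    """
    similar_tops = abs(v2 - v1) / max(v1, v2) <= peak_tolerance
    clear_valley = (min(v1, v2) - v_mid) >= prominence * v_mid
    spacing_ok = min_separation <= bars_apart <= max_width
    if not (similar_tops and clear_valley and spacing_ok):
        return None
    for k, close in enumerate(post_closes[:max_breakout_bars], start=1):
        if close < v_mid:  # neckline = intervening trough
            return k
    return None


print(check_double_top(111, 104, 111, bars_apart=6,
                       post_closes=[110, 108, 105, 102, 100]))  # → 4 (102 < 104)
```

Changing the second top to 108 makes the relative difference about 2.7%. That exceeds peak_tolerance, so the helper returns None.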

Core parameters

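| Parameter | Meaning | Typical values |
|---|---|---|
| w | Half-window (bars on each side) for swing detection | 2–5 |
| prominence | Minimum swing prominence, as a fraction of price | 0.003–0.01 (0.3%–1%) |
| peak_tolerance | Max relative difference between the two tops/bottoms | 0.005–0.02 (0.5%–2%) |
| min_separation | Min bars between first and second top/bottom | 3–10 |
| max_width | Max bars between first and second top/bottom | 20–80 |
| confirm_breakout | Require a close beyond the neckline within max_breakout_bars | True |
| max_breakout_bars | Bars after the second top/bottom to wait for a breakout | 5–20 |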
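The table quotes percentages, but the code takes fractions of price. A small config object can catch unit mix-ups, such as passing 1 to mean 1%, before a long backtest starts. `DoublePatternParams` is a hypothetical name; its defaults copy those of `detect_double_patterns`, and the sanity checks are illustrative assumptions rather than hard rules.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DoublePatternParams:
    """Hypothetical config mirroring the parameter table.

    prominence and peak_tolerance are fractions of price (0.005 means 0.5%).
    """
    w: int = 3
    prominence: float = 0.005
    peak_tolerance: float = 0.01
    min_separation: int = 5
    max_width: int = 60
    confirm_breakout: bool = True
    max_breakout_bars: int = 10

    def __post_init__(self):
        if self.w < 1:
            raise ValueError("w must be at least 1")
        for name in ("prominence", "peak_tolerance"):
            value = getattr(self, name)
            if not 0 < value < 1:
                raise ValueError(f"{name} is a fraction of price, e.g. 0.005 for 0.5%")
        if not 0 < self.min_separation <= self.max_width:
            raise ValueError("need 0 < min_separation <= max_width")
        if self.max_breakout_bars < 1:
            raise ValueError("max_breakout_bars must be at least 1")


params = DoublePatternParams(prominence=0.006, peak_tolerance=0.015,
                             min_separation=4, max_width=40)
kwargs = asdict(params)  # e.g. detect_double_patterns(prices, **kwargs)
```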

Backtest integration tips

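- Signal timing: enter at the close of the breakout bar, but never before the second top/bottom has been confirmed (w bars after it); otherwise the backtest peeks at future bars.

- Stops/targets: the classic measured-move approach projects the pattern height (top to neckline, or neckline to bottom) beyond the neckline as the target, with a stop above the right top (below the right bottom).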

- De-duplication: patterns can overlap. Keep the earliest valid breakout and suppress overlapping detections that reuse the same bars.

- Multi-timeframe: detect on higher timeframe, execute on lower to reduce noise.
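The stop/target and timing tips can be sketched as two small hypothetical helpers. Two assumptions are not stated in the tips: the height is measured from the more extreme of the two tops/bottoms, and the stop sits a small `stop_buffer` fraction beyond the right-hand top/bottom. `entry_bar` applies the timing rule by taking the later of the breakout bar and the bar on which the second extreme is confirmed.

```python
def measured_move_levels(pattern: str, first: float, second: float, neckline: float,
                         stop_buffer: float = 0.001) -> dict:
    """Hypothetical helper: measured-move target and protective stop for one pattern.

    Assumptions: height is taken from the more extreme of the two tops/bottoms,
    and the stop sits stop_buffer (fraction of price) beyond the right-hand one.
    """
    if pattern == 'double_top':
        height = max(first, second) - neckline
        return {'target': neckline - height, 'stop': second * (1 + stop_buffer)}
    if pattern == 'double_bottom':
        height = neckline - min(first, second)
        return {'target': neckline + height, 'stop': second * (1 - stop_buffer)}
    raise ValueError(f"unknown pattern: {pattern!r}")


def entry_bar(breakout_pos: int, p2_pos: int, w: int) -> int:
    """Earliest bar to act on: the breakout bar, but not before the second
    top/bottom is confirmed at p2_pos + w."""
    return max(breakout_pos, p2_pos + w)


# Demo double top: tops at 111, neckline at 104, right top at bar 80, breakout at bar 84.
print(measured_move_levels('double_top', 111.0, 111.0, 104.0))  # target 97.0, stop just above 111
print(entry_bar(breakout_pos=84, p2_pos=80, w=3))                # → 84
```

If the close had crossed the neckline at bar 81, `entry_bar` would return 83, the first bar on which the right top is actually known.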

Performance notes

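- Complexity: swing detection is O(n·w), and the pattern scan only compares swings within max_width bars of each other, so total work grows roughly linearly with series length. Both stages are pure-Python loops, however, and become the bottleneck on multi-million-bar series.

- Speed-ups:
  - Use a larger w or prominence to reduce the number of swings the pattern scan has to consider.
  - Vectorize the window max/min with numpy instead of looping bar by bar; for more speed, JIT-compile the loops with numba.
  - If you already have a peak detector (e.g., from previous stages), feed its results instead of scanning all bars.

- Memory: the method can be adapted to streaming by processing bars incrementally and keeping a rolling buffer of the last ~max_width bars and recent swings.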
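A minimal sketch of the vectorization speed-up is shown below. It applies the same centered-window swing rule with `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later) instead of a per-bar Python loop. `find_swings_vectorized` is a hypothetical name, and it returns integer positions only, not the full DataFrame.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def find_swings_vectorized(prices, w: int = 3, prominence: float = 0.005):
    """Hypothetical vectorized form of the centered-window swing rule.

    Returns (peak_positions, trough_positions) as integer arrays.
    """
    if w < 1:
        raise ValueError("w must be at least 1")
    arr = np.asarray(prices, dtype=float)
    n = len(arr)
    if n < 2 * w + 1:  # no bar has a full window on both sides
        empty = np.array([], dtype=int)
        return empty, empty
    win = sliding_window_view(arr, w)        # win[j] is a view of arr[j:j + w]
    wmax, wmin = win.max(axis=1), win.min(axis=1)
    centre = np.arange(w, n - w)             # bars with w neighbours on each side
    p = arr[centre]
    lmax, lmin = wmax[centre - w], wmin[centre - w]   # previous w bars: arr[i - w:i]
    rmax, rmin = wmax[centre + 1], wmin[centre + 1]   # next w bars: arr[i + 1:i + 1 + w]
    is_peak = (p > lmax) & (p >= rmax) & (p - np.maximum(lmax, rmax) >= prominence * p)
    is_trough = (p < lmin) & (p <= rmin) & (np.minimum(lmin, rmin) - p >= prominence * p)
    return centre[is_peak], centre[is_trough]


peaks, troughs = find_swings_vectorized([1, 2, 3, 10, 3, 2, 1], w=2, prominence=0.01)
print(peaks, troughs)  # → [3] []
```

The window max/min still costs O(n·w), but that work now runs inside NumPy rather than the interpreter. Comparing its output against the loop version on random data is a cheap way to confirm the two agree.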

Pitfalls and gotchas

- Look-ahead bias: centered windows confirm swings with a delay. A swing at bar i is only known once bar i + w has closed, so act on it no earlier than that.

- Overfitting thresholds: too-tight tolerance or too-large prominence reduces detections; too-loose yields noise.

- Data quality: adjust for splits/dividends; use clean close series.

- Regime dependence: reversal patterns fail more often in strong trends; consider trend filters (e.g., moving averages) before acting on them.

- Duplicate swings: volatile periods can generate multiple nearby extremes. Use min_separation and post-processing to merge.
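The look-ahead pitfall can be checked directly by replaying data bar by bar and recording when each peak first becomes visible. The sketch uses a compact, hypothetical `peak_positions` function implementing the same centered-window peak rule (w=3, prominence=0.006) on the double top injected in the demo, re-indexed from 0. Each peak appears exactly w bars after its own position, so stamping a signal at the peak's timestamp would use data that did not yet exist.

```python
import numpy as np


def peak_positions(arr, w=3, prominence=0.006):
    """Hypothetical compact version of the centered-window peak rule."""
    out = []
    for i in range(w, len(arr) - w):
        lmax, rmax = arr[i - w:i].max(), arr[i + 1:i + 1 + w].max()
        if arr[i] > lmax and arr[i] >= rmax and arr[i] - max(lmax, rmax) >= prominence * arr[i]:
            out.append(i)
    return out


# The double top injected in the demo (bars 70-85), re-indexed from 0.
top = np.array([103, 105, 108, 110, 111, 109, 106, 104,
                106, 109, 111, 110, 108, 105, 102, 100], dtype=float)

# Replay: with data up to position t - 1, which peaks are visible?
first_seen = {}
for t in range(1, len(top) + 1):
    for i in peak_positions(top[:t]):
        first_seen.setdefault(i, t - 1)  # peak position -> bar on which it is confirmed
print(first_seen)  # → {4: 7, 10: 13}
```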

FAQ

- Does this require OHLC? No: close-only data is enough for detection here. Using highs for peaks and lows for troughs can improve swing detection.

- Real-time use? Yes, with lag: a swing is only confirmed after w future bars. For immediate detection, use one-sided logic but account for more false positives.

- Intraday data? Works the same; tune prominence, separation, and width to typical intraday volatility and session length.

- How do I choose parameters? Start with w=3, prominence=0.005–0.01 (0.5–1% of price), and peak_tolerance=0.01, then validate via walk-forward backtests.
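Walk-forward validation needs rolling train/test windows. The hypothetical generator below yields them as position ranges: parameters would be tuned on each train window and evaluated on the test window that immediately follows it. The tuning loop itself (e.g., a grid over prominence and peak_tolerance) is left out. Swings within w bars of a window's end are not yet confirmed inside that window.

```python
from typing import Iterator, Optional, Tuple


def walk_forward_splits(n_bars: int, train_bars: int, test_bars: int,
                        step: Optional[int] = None) -> Iterator[Tuple[range, range]]:
    """Hypothetical helper: rolling (train, test) position ranges.

    Windows advance by `step` bars (default: test_bars, i.e. non-overlapping tests).
    """
    step = step or test_bars
    start = 0
    while start + train_bars + test_bars <= n_bars:
        train = range(start, start + train_bars)
        test = range(start + train_bars, start + train_bars + test_bars)
        yield train, test
        start += step


for train, test in walk_forward_splits(n_bars=250, train_bars=100, test_bars=50):
    print(train, test)
```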