Overview

n8n is a workflow automation tool often used to orchestrate AI inference, data enrichment, and ops tasks. This guide compares n8n Cloud with self-hosting for AI engineering use cases (including private models and internal data) and shows how to get started quickly with each.

TL;DR: When to pick which

| You need… | Choose Cloud | Choose Self-host |
|---|---|---|
| Fast setup, no ops | ✓ | |
| Full control over infra, VPC, air‑gapped | | ✓ |
| Strict data residency | | ✓ |
| Custom/community nodes without restriction | | ✓ |
| Managed updates/backups | ✓ | |
| Predictable monthly cost | ✓ | |
| Lowest unit cost at scale | | ✓ |
| Private LLM, on‑prem vector DB, internal APIs | | ✓ |
| Minimal vendor lock‑in | | ✓ |

General rule: Start with Cloud to validate value quickly. Move to self-host when you need deeper control, privacy, or specialized scaling.

Feature comparison (AI-focused)

- Hosting and ops: Cloud is managed; self-host needs Docker/Kubernetes and your monitoring, backups, and updates.

- Data control: Self-host keeps all execution data, logs, and credentials in your environment; easier to meet residency needs.

- Connectivity: Self-host can reach private subnets and on‑prem services without exposing them; Cloud needs public ingress or secure tunnels.

- Extensibility: Both support built-in and community nodes; self-host makes it easier to install custom nodes and the native OS dependencies they may need.

- Scaling: Cloud scales within plan limits. Self-host can scale horizontally (queue mode with Redis + multiple workers).

- Cost: Cloud = subscription per workspace/usage. Self-host = infra + ops time; cheaper per execution at high volume.

Quickstart

n8n Cloud

- Create a workspace and sign in.

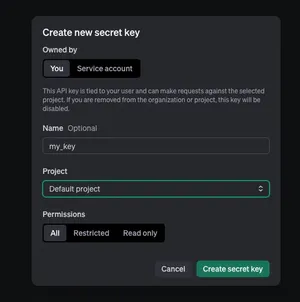

- Add credentials for any external APIs you’ll call (e.g., OpenAI, Slack).

- Build a workflow with a Webhook (or Schedule) trigger.

- Test your webhook URL with curl or another HTTP client; publish when ready.

n8n Self-host (Docker Compose)

- Provision a VM or server with Docker and Docker Compose.

- Create a docker-compose.yml with n8n and Postgres (for persistence).

- Set environment variables (host, SSL, credentials encryption key).

- Bring up the stack and visit the UI.
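
The credentials encryption key mentioned in the steps above should be a long random string. A minimal sketch of generating one with Python's standard library follows; the helper name is illustrative and not part of n8n.

```python
import secrets


def generate_encryption_key(num_bytes: int = 32) -> str:
    """Return a random hex string (two hex characters per byte)."""
    return secrets.token_hex(num_bytes)


# Example: print a 64-character key to paste into N8N_ENCRYPTION_KEY.
print(generate_encryption_key())
```

Keep the generated value somewhere safe: credentials saved in n8n are encrypted with this key, so a lost key means re-entering them.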

Minimal compose file:

    version: "3.9"

    services:
      postgres:
        image: postgres:15
        environment:
          # Placeholder credentials: change these before exposing the stack.
          POSTGRES_USER: n8n
          POSTGRES_PASSWORD: n8n
          POSTGRES_DB: n8n
        volumes:
          - n8n-db:/var/lib/postgresql/data

      n8n:
        image: n8nio/n8n:latest
        ports:
          - "5678:5678"
        environment:
          N8N_HOST: localhost
          N8N_PORT: 5678
          N8N_PROTOCOL: http
          DB_TYPE: postgresdb
          DB_POSTGRESDB_HOST: postgres
          DB_POSTGRESDB_DATABASE: n8n
          DB_POSTGRESDB_USER: n8n
          DB_POSTGRESDB_PASSWORD: n8n
          N8N_ENCRYPTION_KEY: "replace-with-32+chars-random-key"
          GENERIC_TIMEZONE: UTC
        depends_on:
          - postgres
        volumes:
          - n8n-data:/home/node/.n8n

    # Named volumes keep the database and n8n settings across restarts.
    volumes:
      n8n-db:
      n8n-data:

Start it:

docker compose up -d

Open http://localhost:5678 to finish setup.
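
Before opening the UI, it can help to confirm that the container has finished starting. The sketch below polls a health URL until it answers with HTTP 200. It assumes the instance exposes n8n's /healthz endpoint on the same port; the function names and the injectable `fetch` parameter are illustrative choices, not part of n8n.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def wait_until_healthy(
    url: str = "http://localhost:5678/healthz",  # assumed health endpoint
    attempts: int = 30,
    delay_s: float = 2.0,
    fetch: Callable[[str], int] = http_status,
) -> bool:
    """Poll the URL until it answers 200 or the attempts run out."""
    for attempt in range(attempts):
        if fetch(url) == 200:
            return True
        if attempt < attempts - 1:
            time.sleep(delay_s)
    return False
```

Calling `wait_until_healthy()` after `docker compose up -d` returns True once the instance responds, or False after roughly a minute with the defaults shown.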

Minimal working example: Webhook → local LLM (Ollama) → Response

This example accepts a prompt, calls a local LLM (Ollama at http://localhost:11434 on the Docker host, reached from the n8n container as host.docker.internal), and returns the model’s text. Import the workflow JSON below into n8n.

Assumptions:

- You have Ollama running:

      ollama run llama3

- n8n is reachable at http://localhost:5678

Workflow JSON:

    {
      "name": "AI Webhook to Ollama",
      "nodes": [
        {
          "parameters": {
            "httpMethod": "POST",
            "path": "ai/complete",
            "responseMode": "responseNode"
          },
          "type": "n8n-nodes-base.webhook",
          "typeVersion": 1,
          "position": [200, 300],
          "name": "Webhook"
        },
        {
          "parameters": {
            "method": "POST",
            "url": "http://host.docker.internal:11434/api/generate",
            "sendBody": true,
            "specifyBody": "json",
            "jsonBody": "={{ JSON.stringify({ model: 'llama3', prompt: $json.body.prompt, stream: false }) }}",
            "options": {
              "timeout": 90000
            }
          },
          "type": "n8n-nodes-base.httpRequest",
          "typeVersion": 3,
          "position": [500, 300],
          "name": "HTTP Request"
        },
        {
          "parameters": {
            "respondWith": "text",
            "responseBody": "={{ $json.response }}",
            "options": {
              "responseCode": 200
            }
          },
          "type": "n8n-nodes-base.respondToWebhook",
          "typeVersion": 1,
          "position": [800, 300],
          "name": "Respond"
        }
      ],
      "connections": {
        "Webhook": {"main": [[{"node": "HTTP Request", "type": "main", "index": 0}]]},
        "HTTP Request": {"main": [[{"node": "Respond", "type": "main", "index": 0}]]}
      }
    }

Test it:

    curl -X POST http://localhost:5678/webhook/ai/complete \
      -H 'Content-Type: application/json' \
      -d '{"prompt":"Write a short haiku about n8n."}'

Notes:

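- On Linux, host.docker.internal is not defined by default. Replace it with your host IP, or map it on the n8n service with extra_hosts ("host.docker.internal:host-gateway").

- The /webhook/ URL responds only while the workflow is active. While testing in the editor, call /webhook-test/ai/complete instead.

- The Webhook node places the POSTed JSON under body, so the prompt is available to later nodes as $json.body.prompt.

- Node parameter names differ between node versions. After importing, open each node and confirm that the method, URL, and body are populated.

- If using Cloud, replace the HTTP Request URL with a public LLM endpoint or a secure tunnel to your network.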
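
For scripted tests, the same call can be made from Python. This is a minimal standard-library sketch, assuming the workflow above is active on the default local address; `build_request` and `complete` are illustrative names. The client timeout is set above the 90-second timeout configured on the HTTP Request node so the workflow gives up before the caller does.

```python
import json
import urllib.request


def build_request(prompt: str, base_url: str = "http://localhost:5678") -> urllib.request.Request:
    """Build the POST request that the workflow's Webhook node expects."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/webhook/ai/complete",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, base_url: str = "http://localhost:5678", timeout_s: float = 120.0) -> str:
    """Send the prompt and return the response body as text."""
    request = build_request(prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")
```

Usage: `print(complete("Write a short haiku about n8n."))`.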

Operational pitfalls to avoid

- Webhooks and TLS
  - Cloud provides a public URL. For self-host, put n8n behind a TLS-terminating reverse proxy and set N8N_HOST, N8N_PROTOCOL, and WEBHOOK_URL so that generated webhook URLs match the public address.

- Persistence
  - Use Postgres and persistent volumes (n8n has deprecated MySQL/MariaDB as a backend). SQLite in ephemeral containers can lose data.

- Large payloads/binaries
  - Configure binary data mode and storage location. Reverse proxies may need increased body size limits.

- Secrets management
  - Always set N8N_ENCRYPTION_KEY. Scope API keys to minimum permissions. Consider external secret stores for self-host.

- Scaling workers
  - For high throughput, enable queue mode with Redis and run separate workers. Don’t put heavy work on the main process.

- Timeouts and retries
  - Tune node timeouts and retry settings, especially for LLM or vector DB calls that can be slow.

- Upgrades
  - Cloud auto-updates. For self-host, test upgrades in staging; breaking changes can affect nodes or credentials.
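
Clients that call a slow, LLM-backed webhook benefit from the same timeout-and-retry discipline as the nodes themselves. The sketch below shows one common pattern, exponential backoff with a cap; it is a client-side illustration with hypothetical function names, not a description of how n8n's built-in retry setting behaves.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int, base_s: float = 1.0, factor: float = 2.0, cap_s: float = 30.0) -> List[float]:
    """Delay before each retry: base, base*factor, ... capped at cap_s."""
    return [min(cap_s, base_s * factor**i) for i in range(retries)]


def call_with_retries(
    fn: Callable[[], T],
    retries: int = 3,
    sleep: Callable[[float], None] = time.sleep,
    **backoff: float,
) -> T:
    """Call fn up to retries + 1 times, sleeping between failed attempts."""
    for delay in backoff_delays(retries, **backoff):
        try:
            return fn()
        except Exception:  # sketch only: narrow this to timeout/5xx errors
            sleep(delay)
    return fn()  # final attempt: any exception propagates to the caller
```

With the defaults, a call that keeps failing is retried after 1, 2, and 4 seconds before the last error is raised.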

Performance notes (AI workloads)

- Use queue mode for concurrency: set EXECUTIONS_MODE=queue, add Redis, start multiple workers. Scale workers horizontally.

- Keep main instance light: use it for scheduling/webhooks; push heavy jobs to workers.

- Tune concurrency: align per-worker concurrency (for example, n8n worker --concurrency=5) with external service limits such as LLM rate limits.

- Batch where possible: pre-chunk prompts or documents to reduce per-item overhead.

- Avoid large inline JSON: store big artifacts in object storage and pass references.

- Short-circuit webhooks: use Respond to Webhook early, process async, then notify if needed.

- Observability: capture latency, error rate, token usage, and queue depth to spot bottlenecks.
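
To make the concurrency advice concrete, a rough sizing rule follows from Little's law: the number of requests in flight equals the request rate multiplied by the time each request takes. The sketch below is a back-of-the-envelope calculation that assumes each execution makes one LLM call and spends most of its time waiting on it; the function names and figures are illustrative.

```python
import math


def max_in_flight(rate_limit_per_min: float, avg_latency_s: float) -> int:
    """Concurrent calls that just saturate the rate limit (Little's law)."""
    return math.floor(rate_limit_per_min * avg_latency_s / 60)


def max_workers(rate_limit_per_min: float, avg_latency_s: float, per_worker_concurrency: int) -> int:
    """Largest worker count whose combined concurrency stays within the limit.

    A result of 0 means even one worker at this concurrency could exceed
    the limit, so lower the per-worker concurrency instead.
    """
    return max_in_flight(rate_limit_per_min, avg_latency_s) // per_worker_concurrency


# Example: 120 requests/min allowed, 5 s average LLM latency.
print(max_in_flight(120, 5))   # 10 calls can be in flight at once
print(max_workers(120, 5, 5))  # 2 workers at concurrency 5
```

Running more workers than this mostly produces rate-limit errors and retries rather than extra throughput.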

Cost considerations

- Cloud: Simpler budgeting; you trade higher unit cost for zero ops.

- Self-host: Lower unit cost at scale, but factor in engineering time, monitoring, backups, and security hardening.
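
The trade-off above can be estimated with a simple linear model: each option has a fixed monthly cost plus a cost per 1,000 executions. The sketch below finds the monthly volume at which self-hosting stops being more expensive. All prices are hypothetical placeholders rather than actual n8n or cloud-provider pricing, and the self-host fixed cost should include engineering time.

```python
import math
from typing import Optional


def break_even_executions(
    cloud_fixed: float,
    cloud_per_1k: float,
    self_fixed: float,
    self_per_1k: float,
) -> Optional[int]:
    """Smallest monthly execution count at which self-host costs no more than Cloud.

    Returns 0 if self-host is already no more expensive at zero volume,
    and None if it never catches up.
    """
    extra_fixed = self_fixed - cloud_fixed
    saving_per_1k = cloud_per_1k - self_per_1k
    if extra_fixed <= 0:
        return 0
    if saving_per_1k <= 0:
        return None
    return math.ceil(1000 * extra_fixed / saving_per_1k)


# Hypothetical figures: Cloud at 50/month + 10 per 1k executions,
# self-host at 450/month (infra + ops time) + 2 per 1k executions.
print(break_even_executions(50, 10, 450, 2))  # 50000
```

Below the break-even volume, Cloud is the cheaper option under this model; above it, the lower unit cost of self-hosting outweighs its fixed overhead.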

Compliance and licensing

- n8n is source-available; self-hosting is permitted. If you redistribute or build a competing hosted service, review the license terms.

- For regulated workloads, self-host simplifies data residency and private-network access; Cloud shifts some controls to the provider, but you still own data classification and usage policies.

Decision checklist

- Do you need private LLMs or internal databases? → Self-host.

- Is rapid validation more important than control? → Cloud.

- Expecting unpredictable spikes? → Self-host with queue workers.

- Limited ops bandwidth? → Cloud.

FAQ

Can I start on Cloud and migrate to self-host later? Yes. Export workflows/credentials (rotate secrets) and reconfigure endpoints. Test webhooks and queues in staging.

Can I call local LLMs from Cloud? Only if they’re reachable from the internet (or via a secure tunnel). Otherwise self-host.

Do community/custom nodes work on Cloud? Many do, but self-host offers maximum flexibility, including nodes that need OS-level dependencies.

How do I scale for heavy AI jobs? Use Redis queue mode, multiple workers, and early webhook responses; keep main instance thin.

What database should I use? Postgres is the common choice for reliability and performance.